Wan 2.5 vs Wan 2.2: Which Wan AI API Is Better for Production in 2026?

PiAPI Breaks Down the Decision!

In September 2025, Alibaba launched Wan 2.5, its most advanced multimodal video model to date. It's a significant leap forward, but that doesn't automatically mean it's the right choice for everyone.

If you're building AI video workflows today, you're probably wondering: should I upgrade from Wan 2.2 to Wan 2.5? Both models are available through PiAPI, but they're built for different use cases - and the answer depends on what matters most to your project.

In this breakdown, PiAPI compares the Wan 2.2 API and the Wan 2.5 API across the four areas that the official Wan 2.5 documentation highlights as key upgrades: cinematic-level aesthetics, instruction adherence, smoother motion generation, and native audio synchronization. For each, we’ll show side-by-side videos generated from the same prompt, so you can quickly see where Wan 2.5 really pulls ahead - and where Wan 2.2 is still more than good enough for AI video generation.

The Final Verdict: Wan 2.5 or Wan 2.2

Wan 2.5 is the better choice for production-grade video generation when cinematic visuals, smooth motion, and native audio matter. Wan 2.2 remains a strong, cost-efficient option for prompt-driven drafts and internal testing. In practice, teams benefit most from a hybrid workflow - iterating with Wan 2.2 and finalizing with Wan 2.5.

Key Features of Wan 2.5 API

The Wan 2.5 API is built as the cinematic, production-ready option, with four capabilities that matter most for developers and content creators.

- Cinematic-Level Aesthetics

Wan 2.5 produces richer lighting, composition, and colour grading. Shots feel more structured and stable, making it easier to ship client-facing work without heavy post-processing.

- Strong Instruction Adherence

Compared to Wan 2.2, Wan 2.5 follows complex prompts more reliably - camera moves, character actions, outfit details, and scene constraints are more likely to appear exactly as described. That means fewer retries and more predictable output for your pipelines.

- Smoother Motion Generation

Wan 2.5 reduces jittery camera moves and awkward character animation. Action, tracking shots, and transitions play back with more natural motion, which is critical for ads, product demos, and dynamic social content.

- Native Audio Synchronization

With upgraded audio-visual alignment, the Wan 2.5 API keeps voices, sound effects, and music in sync with what's on screen. Lip movements match speech more closely and cuts land nearer to the beat, so you spend less time fixing timing in an editor. This is a new feature that is not available on the Wan 2.2 T2V model.

Evaluation Framework

To compare Wan 2.2 and Wan 2.5, we use the text-to-video (T2V) feature of both models. The evaluation framework is adapted from the one in our previous blog comparing Luma Dream Machine 1.0 vs 1.5, and the examples below assess each output on realism, dynamic movement, and prompt adherence. For more information, feel free to read our previous blog.

Video Comparison: Wan 2.2 API vs Wan 2.5 API

For each dimension, we use the same text prompt on both models and then score the outputs using our adapted T2V framework. Below is how Wan 2.5 and Wan 2.2 behave side by side.

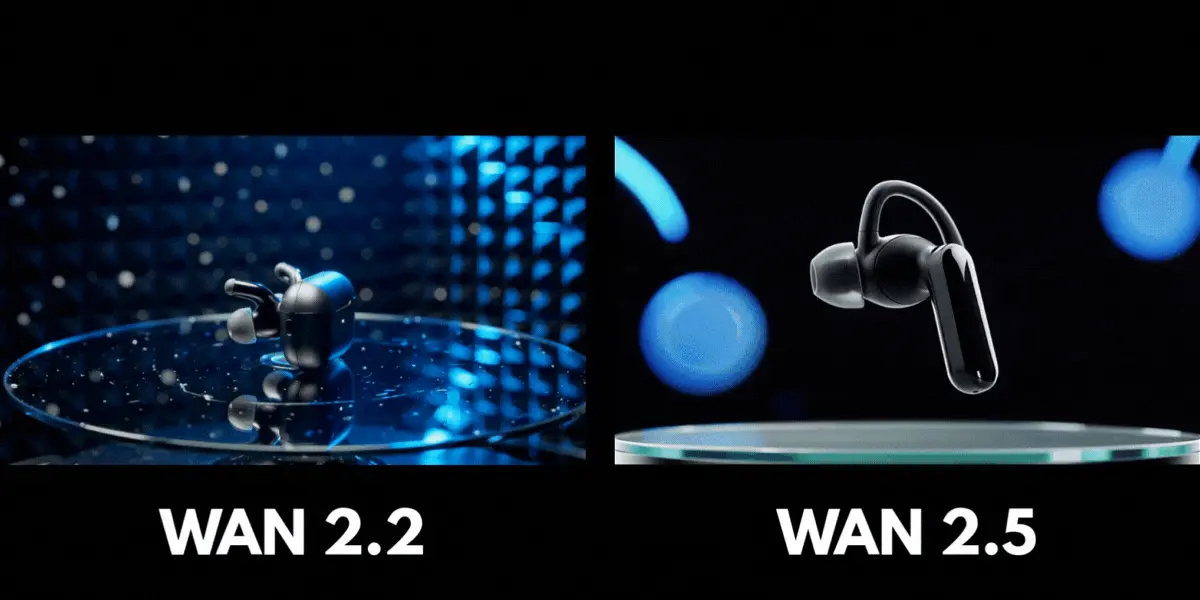

Example 1: Cinematic-Level Aesthetics

Think brand films, product launches, and hero creatives where you want every frame to look like a polished commercial.

Both videos score well on realism: light reflects off the earbuds and creates a gloss that feels close to real-world lighting. Dynamic movement is also strong in both, with a smooth product rotation and no visible jump cuts. On prompt adherence, both videos follow the prompt reasonably well, with soft rim lighting and neon blue highlights in the background, but each misses one detail. Wan 2.2 ignored the request for a single wireless earbud and instead showed two earbuds with a case. Wan 2.5 did not render the reflective glass surface, which Wan 2.2 did. Overall, we think Wan 2.5 did a better job on cinematic-level aesthetics.

Example 2: Strong Instruction Adherence

Perfect for multi-character scenes and story-driven videos where outfits, actions, and camera moves all need to follow a tightly written brief.

For realism, Wan 2.2 oversaturates elements such as the table, which reduces the natural feel of the scene, while Wan 2.5 captures realistic lighting and colour much better. For dynamic movement, both videos perform very well - human motion and camera motion are smooth with no visible jump cuts. In terms of prompt adherence, both models perform reasonably well, but Wan 2.5 does not follow the specified camera movement accurately. Overall, we feel that Wan 2.2 performed better in this example because it followed the prompt more precisely.

Example 3: Smoother Motion Generation

Great for action shots, sports clips, and dynamic product sequences where jittery movement would instantly break immersion.

For realism, Wan 2.2 performs exceptionally well, especially in how it renders the shadows of the moving person and skateboard, while the shadows in Wan 2.5 are slightly distorted. Both videos handle dynamic movement very well, with smooth motion and no visible jump cuts. In terms of prompt adherence, Wan 2.2 is imprecise with the skateboard movement and misses the kickflip, whereas Wan 2.5 adheres to the prompt more closely. Overall, Wan 2.5 performed better in this example, as we felt its motion generation was smoother than Wan 2.2's.

Example 4: Native Audio Synchronization

Made for explainer videos, talking-head content, and music-led edits. For this example, click on the generated videos and see where Wan 2.5 truly outperforms Wan 2.2 with its ability to generate video with native audio.

Wan 2.2: Generated Video without Audio

Wan 2.5: Generated Video with Native Audio Synchronization

Prompt: A presenter standing in front of a large screen with simple charts, speaking directly to the camera and occasionally gesturing with their hands. The lip movements should match the voiceover of a friendly product explainer, with subtle camera breathing and a soft background track.

For realism, both models perform exceptionally well. The lighting and facial details of the presenters are crisp and high quality. For dynamic movement, both models perform really well, from the hand gestures to the facial movements, and the motion is smooth with no visible jump cuts. In terms of prompt adherence, both models followed the other details of the prompt, such as the charts and the soft background. However, only Wan 2.5 generated audio along with the video; Wan 2.2's output had no audio. Overall, Wan 2.5 clearly stood out as the winner for this example thanks to its native audio synchronization, which Wan 2.2 lacks.
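The overall verdicts from the four examples above can be tallied in a few lines. This is only a bookkeeping sketch: the `verdicts` dictionary and the `tally_wins` helper are names made up for illustration, and the entries simply restate the "Overall" call made in each example rather than any numeric score.

```python
from collections import Counter

# Overall verdict per example, copied from the write-ups above.
# (Illustrative structure; the article itself reports no numeric scores.)
verdicts = {
    "Cinematic-Level Aesthetics": "Wan 2.5",
    "Strong Instruction Adherence": "Wan 2.2",
    "Smoother Motion Generation": "Wan 2.5",
    "Native Audio Synchronization": "Wan 2.5",
}


def tally_wins(results: dict[str, str]) -> Counter:
    """Count how many examples each model won."""
    return Counter(results.values())


wins = tally_wins(verdicts)
for model, count in wins.most_common():
    print(f"{model}: {count} of {len(verdicts)} examples")
```

A simple count like this hides the nuance in each write-up (for instance, Wan 2.2 rendered shadows better in Example 3), so it is best read as a summary aid rather than a ranking.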

Conclusion: Choosing the Right Wan AI API for Production

Based on the four examples provided above, we can see that there is no single “winner” across every dimension - but each model has a clear role in a production workflow. Wan 2.5 consistently shines in cinematic-level aesthetics, smoother motion generation, and native audio synchronization, making it the stronger choice for hero content, motion-heavy shots, and talking-head videos where polish really matters.

At the same time, Wan 2.2 still holds its own, especially in prompt adherence and realism, and remains a very capable T2V model for many everyday use cases. If you’re generating drafts, internal concept tests, or don’t need audio-visual sync, Wan 2.2 can be the more cost-efficient option without sacrificing too much quality.

In practice, the best approach is often a hybrid strategy: explore ideas and iterate quickly with the Wan 2.2 API, then promote your best prompts to the Wan 2.5 API for final, production-grade renders - especially when you care about cinematic visuals, smooth motion, or native audio. With PiAPI exposing both models through a single Wan AI API integration, it’s easy to A/B test, compare outputs side by side, and choose the right model for each video generation job in your pipeline.
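One way to express this hybrid strategy in a pipeline is a small routing rule that picks a model per job. The sketch below is an assumption-laden illustration, not PiAPI's API: `VideoJob`, `choose_wan_model`, and the labels `"wan-2.2"` and `"wan-2.5"` are hypothetical names, and the actual request to PiAPI is deliberately left out because its parameters are not described in this article.

```python
from dataclasses import dataclass

# Hypothetical labels for illustration; check the PiAPI docs for the
# real model identifiers before using them in requests.
DRAFT_MODEL = "wan-2.2"
FINAL_MODEL = "wan-2.5"


@dataclass
class VideoJob:
    """A single text-to-video job in a (hypothetical) pipeline."""
    prompt: str
    is_final_render: bool = False  # True for client-facing output
    needs_audio: bool = False      # True when native audio is required


def choose_wan_model(job: VideoJob) -> str:
    """Route drafts to Wan 2.2 and final or audio jobs to Wan 2.5."""
    # Native audio is only available on Wan 2.5 per the comparison above,
    # so audio jobs go there even while still iterating.
    if job.needs_audio or job.is_final_render:
        return FINAL_MODEL
    return DRAFT_MODEL


draft = VideoJob(prompt="product teaser, first pass")
final = VideoJob(prompt="product teaser, approved prompt", is_final_render=True)
explainer = VideoJob(prompt="presenter explainer", needs_audio=True)

for job in (draft, final, explainer):
    print(f"{job.prompt!r} -> {choose_wan_model(job)}")
```

Keeping the decision in one function makes it easy to adjust later, for example to send a share of draft jobs to both models for A/B comparison.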
